Nate Silver 2012

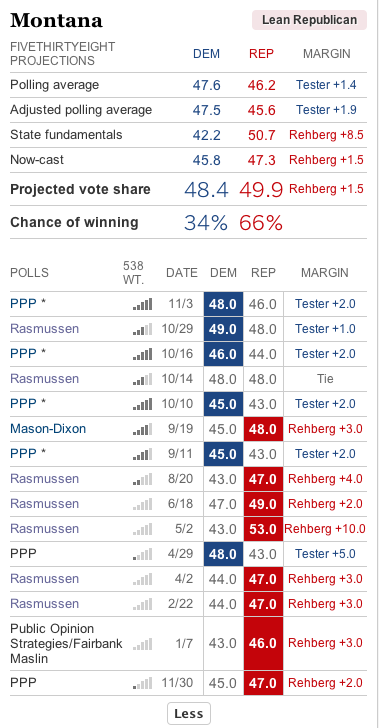

Just before the 2012 election, Nate Silver forecast (right) that there was a 66 percent chance that Denny Rehberg would win the election for U.S. Senate, defeating Jon Tester by 1.5 percentage points. But when the votes were counted, Tester was victorious, winning a plurality and finishing 3.7 percentage points ahead of Rehberg.

Why did Silver wrongly predict Tester v. Rehberg? And, given that he was wrong in 2012, could he also be wrong now when he gives John Walsh only a 20 percent chance of beating Steve Daines?

The answers:

Last Friday, Silver reappeared with his fundamentals, predicting that there is only a 20 percent chance that John Walsh will be elected to the U.S. Senate in November. Not surprisingly, the Democratic Senatorial Campaign Committee took issue with that, reminding everyone that Silver had wrongly predicted that Jon Tester would lose in 2012.

Tester consistently polled behind Rehberg throughout most of 2012, pulling ahead only after Labor Day. On 5 April 2012, I compared Tester’s re-election polling performance with his polling performance in 2006, when he maintained a very small lead throughout the campaign. To the dismay and irritation of Democrats, I concluded that Tester was in trouble.

And he was in trouble, even with a war chest stuffed with millions of dollars. Around Labor Day, however, his fortunes improved.

My 5 November 2012 analysis of publicly available polls put Tester’s lead at 0.6 percentage points and gave Tester 3:2 odds (a 60 percent chance) of winning. That estimate was conservative: five of the six polls taken on or after 28 October 2012 had Tester leading by at least a point (the Mason-Dixon poll commissioned by the Lee newspapers was the outlier, out in Rehbergland). Unlike Silver, I didn’t apply a “fundamentals” adjustment to the result of my aggregation of the polls. Neither, if memory serves me, did Sam Wang at Princeton or other aggregators.

A quick-’n’-dirty primer on poll aggregation

Poll aggregators combine a number of polls taken at about the same time and asking the same question, which increases the effective sample size and thus reduces the margin of error (MOE). A poll of 600 respondents has an MOE of about four percentage points at the 95 percent confidence level. Aggregating four such 600-respondent polls (2,400 respondents in all) cuts the MOE to about two points, because the MOE shrinks with the square root of the sample size. A second benefit of looking at clusters of polls is that it becomes possible to identify outliers. In 2012, for example, it was evident that the Mason-Dixon polls commissioned by the Lee newspapers were overestimating Rehberg’s popularity.
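The margin-of-error arithmetic in the primer can be checked with a short Python sketch. It assumes simple random samples, the textbook worst case of p = 0.5, and z = 1.96 for 95 percent confidence, and it assumes the pooled polls are similar enough to be treated as one big sample (real aggregators also weigh timing, pollster track records, and house effects). The `flag_outliers` helper is a hypothetical, deliberately rough screen for illustration, not the method used by Silver, Wang, or any other aggregator, and the poll numbers fed to it are made up.

```python
import math

Z_95 = 1.96  # two-sided critical value for 95 percent confidence


def margin_of_error(n, p=0.5, z=Z_95):
    """MOE for one candidate's share in a simple random sample of size n.
    p = 0.5 gives the largest (worst-case) MOE, the figure pollsters usually quote."""
    return z * math.sqrt(p * (1 - p) / n)


def pooled_margin_of_error(sample_sizes, p=0.5, z=Z_95):
    """MOE if simultaneous polls asking the same question are treated as one
    combined sample (assumes comparable methods and no house effects)."""
    return margin_of_error(sum(sample_sizes), p, z)


def flag_outliers(polls):
    """Hypothetical rough screen. polls is a list of (lead, sample_size) pairs,
    with leads as fractions (0.02 = 2 points); needs at least two polls.
    Each poll is compared with the sample-size-weighted average lead of the
    OTHER polls, so an outlier cannot pull the average toward itself. A poll is
    flagged when the gap exceeds that poll's MOE on the lead, taken here as
    twice the MOE on a single share (the close two-way-race approximation)."""
    flags = []
    for i, (lead, n) in enumerate(polls):
        others = [poll for j, poll in enumerate(polls) if j != i]
        total_n = sum(m for _, m in others)
        others_avg = sum(other_lead * m for other_lead, m in others) / total_n
        moe_on_lead = 2 * margin_of_error(n)
        flags.append(abs(lead - others_avg) > moe_on_lead)
    return flags


if __name__ == "__main__":
    print(f"one 600-person poll: +/- {margin_of_error(600):.1%}")
    print(f"four pooled polls:   +/- {pooled_margin_of_error([600] * 4):.1%}")

    # Made-up (lead, sample size) pairs for illustration; NOT the 2012 polls.
    hypothetical_polls = [(0.02, 600), (0.01, 600), (0.03, 600), (-0.09, 600)]
    print("outlier flags:", flag_outliers(hypothetical_polls))
```

Run as written, the pooled MOE comes out at about half the single-poll MOE (roughly 2 versus 4 points), and only the made-up fourth poll, which sits about 11 points from the average of the other three, is flagged.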

We do not choose our clothing for a Labor Day picnic on the basis of a weather forecast made two months before Memorial Day. Long-range forecasts are more guess than anything else because the data on which they are based are few and sometimes of dubious provenance, something Silver himself acknowledges:

Furthermore, much of the polling comes from firms such as Rasmussen Reports and Public Policy Polling, which have poor track records, employ dubious methodologies, or both. So the most appropriate use of polls at this stage is to see whether they roughly match our assessment of the race based on the fundamentals. Where there is a mismatch, it could indicate that the polls are missing something, that our view of the fundamentals is incorrect, or some of both — and it means there is more uncertainty in the outlook for the state.

In 2012, Silver’s fundamentals were noise, not signal. Had he based his forecast on the polls, he would have predicted Tester’s victory.

In March 2014, Silver doesn’t have much to work with other than his fundamentals. Two early robo-polls, by PPP and Rasmussen, report that a majority favor Daines and that he leads Walsh by 14–17 percentage points, with 9–13 percent undecided. That a majority favor Daines should worry Democrats, but the gap between the candidates will close after the primary and as the election approaches. Moreover, these early polls do not usefully account for the Libertarian candidate, Roger Roots.

Here’s what we have to work with so far in 2014:

Nevertheless, the polls conducted by the candidates and their political parties probably are reporting similar results. That can’t be helping Walsh, especially with fundraising (the first quarter of 2014 reporting period ends on 31 March, so be prepared to be asked to donate during the next four days).

Silver can be wrong, but he’s widely known and generally well respected. That’s why the DSCC’s swift and hard pushback was necessary. If Democrats lose heart because of Silver’s prediction, they may throw in the towel now and make his prediction that Walsh will lose to Daines a self-fulfilling prophecy.

A decade ago, the polls by the Lee newspapers (Missoulian, Billings Gazette, Montana Standard, etc.) were important sources of information that the newspapers dribbled out bit by bit over most of a week. In 2012, the Lee polls, conducted by Mason-Dixon, were way off the mark. Lee should rethink its approach to election polls and adopt the aggregation approach employed by Silver, Wang, and others. If the aggregation can’t be performed in-house, Montana has two fine state universities with excellent political scientists and statisticians who could be hired as aggregators, preferably by a consortium of the state’s major dailies and television stations.